
Computational Symbiosis: Methods That Meld Mind and Machine

Slides [5/5]

Introduction [3/3]

Spoken Intro B_note

Hello, everyone!

My name is Mike Gerwitz. I am a free software hacker and activist with a focus on user privacy and security. I'm also a GNU Maintainer and volunteer. I have about twenty years of programming experience, half of that professionally.

And I've been a computer user for longer. So I've been around long enough to see a decent evolution in how we interact with machines.

Choreographed Workflows B_fullframe

Choreographed Workflows

Notes B_noteNH

What we have tended toward over the years are interfaces that try to cater to as many people as possible by providing carefully choreographed workflows that think for you. And I don't deny that this has been a useful method for making computers accessible to huge numbers of people. But it's important to understand where this trend falls short.

Practical Freedom B_fullframe

Practical Freedom

Notes B_noteNH

This is a talk about practical freedoms—an issue separate from but requiring software freedom. If developers are thinking for us and guiding us in our computing, then we're limited to preconceived workflows. This leaves immense power in the hands of developers even if software is free, because average users are stuck asking them to implement changes, or footing the bill for someone else to do so.

My goal here is to blur those lines between ``user'' and ``programmer'' and show you how users can be empowered to take control of their computing in practical and powerful ways. But it does require a different way of thinking.

To begin, let's start by exploring something that I think most people can relate to using.

Practical Example: Web Browser [8/8]

Example: Web Browser B_frame

Notes B_noteNH

The only GUI I use on a day-to-day basis is my web browser. In this case, GNU IceCat, which is a Firefox derivative. This is a screenshot of an admittedly staged session, and contains a number of add-ons.

Finding Text (Mouse-Driven GUI Interaction) B_frame

Images B_columns

Left B_column

Right B_column

Notes B_noteNH

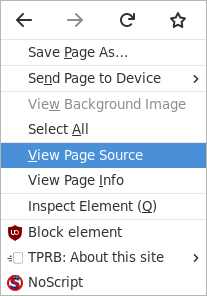

A common operation is to search for a word or phrase, as shown here.

Now, how exactly to do this with a mouse varies depending on what browser you're using, but here I highlighted the steps in a modern IceCat or Firefox. You start by clicking on the little hamburger, hotdog, or whatever-you-want-to-call-it menu in the upper-right, and then click on "Find in This Page" within the popup. This then opens a bar at the bottom of the page with an area to type the word or phrase you're searching for. It highlights and scrolls to the first match as you type, and has a button to highlight all results. It also shows the number of results off to the right. It's a simple, yet powerful mechanism that is pretty easy to use.

Is this an efficient means to communicate your intent? If you're just searching for a name or concept, sure, it's not so bad.

But notice how I had to convey these steps to you. I had to take screenshots and highlight where to click with the mouse. Since a GUI is inherently very visual, so are the instructions on how to use it. There is no canonical representation for these instructions, because it involves clicking on elements that have no clear name to the user.

GUIs Change Over Time B_frame

Images B_columns

Left B_column

Right B_column

Ctrl+F

Notes B_noteNH

Another difficult thing is: GUIs change over time. I'm sure many people here remember earlier versions of Firefox that didn't have the hamburger menu, where the Find menu option was in the Edit menu. Those old menus do still exist if you hit Alt. I miss the old menus. Saying "Go to Edit - Find" is pretty clear, and those menu positions were always in the same place across the entire desktop environment. Now individual programs may vary in their user experience.

But do you notice something in common between these two screenshots?

There's something that hasn't changed over time—something

that has been the same for decades!

Ctrl+F.

When you type Ctrl+F,

it immediately opens that search bar and gives focus to the textbox,

so you can just start typing.

Muscle Memory B_fullframe

Muscle Memory

Visual ⇒ Tactile

Notes B_noteNH

With a mouse and a GUI, interaction is driven by visual indicators. The position of your hand on the mousepad or your fingers on a touchpad isn't meaningful, because your mouse cursor could be anywhere on the screen at any given time; your eyes provide the context.

But by hitting Ctrl+F,

we have switched our mode of interaction.

It's now tactile.

You associate a finger placement;

a motion;

and the feeling of the keys being pressed beneath your fingers

with an action—finding

something.

You develop muscle memory.

<Repeatedly make motion with hand and fingers like a madman during the above paragraph.>

A Research Task B_fullframe

Research Task:

Given a list of webpage URLs

find all that do not contain ``free software''

Notes B_noteNH

Let's explore a fairly simple research task together. Let's say I email you a handful of URLs, maybe 5 or 10 of them, that are articles about software or technology. And I want you to come up with a list of the webpages that do not contain the phrase ``free software'' so that I can get a better idea of ones to focus my activism on.

How might we approach this problem as an average user?

Executing the Research Task B_frame

Approaches B_columns

Mouse B_column

Mouse

1. Click `+' for each new tab, enter URL

2. Menu → Find in This Page

3. Type ``free software''

4. If found, go to #9

5. If not found, highlight URL, right-click, copy

6. Click on text editor

7. Right-click, paste URL, hit RET for newline

8. Click on web browser

9. Click `X' on tab, go to #2

(Perhaps I should demonstrate this right away rather than reading through the list first, to save time?)

Let's first use the mouse as many users probably would. To set up, let's open each URL in a new tab. We click on the little `+' icon for a new tab and then enter the URL, once for each webpage, perhaps copying the URL from the email message. Once we're all set up, we don't care about the email anymore, but we need a place to store our results, so we open a text editor to paste URLs into.

Now, for each tab, we click on the little hamburger menu, click on ``Find in This Page'', and then type ``free software''. If we do not see a result, we move our mouse to the location bar, click on it to highlight the URL, right-click, copy, click on the text editor to give it focus, right-click on the editor, ``Paste'', and then hit the return key to move to the next line. We then go back to the web browser. If we do see a result, we skip copying over the URL. In either case, we then close the tab by clicking on the `X'.

And then we repeat this for each tab, until they have all been closed. When we're done, whatever is in our text editor is the list of URLs of webpages that do not reference ``free software'', and we're done.

Simple enough, right? But it's a bit of a pain in the ass. All this clicking around doesn't really feel like we're melding mind and machine, does it?

What if we used our Ctrl+F trick?

That saves us a couple clicks,

but can we do better?

Keyboard B_column

Keyboard

1. Ctrl+T for each new tab, enter URL

2. Ctrl+F to find

3. Type ``free software''

4. If found, go to #9

5. If not found, Ctrl+L Ctrl+C to copy URL

6. Alt+Tab to text editor

7. Ctrl+V RET to paste URL and add newline

8. Alt+Tab to web browser

9. Ctrl+W to close tab, go to #2

Fortunately we have many more keybindings at our disposal!

We'll start with opening each new tab with Ctrl+T instead of clicking on

`+' with the mouse.

(Maybe show copying the URL from the email without the mouse?)

To open our text editor,

we'll use Alt+F2,

which on many desktops opens a dialog for entering a command to run.

Once we're all set up,

we start with the first tab and use Ctrl+F as we've seen before,

and then type ``free software''.

If we do not find a match,

we're ready to copy the URL.

Hitting Ctrl+L will take us to the location bar and highlight the URL.

We can then hit Ctrl+C to copy the URL to the clipboard.

Alt+Tab to go to our text editor.

We then paste with Ctrl+V and hit return to insert a newline.

We can then go back to the web browser by hitting Alt+Tab again.

We then close the tab with Ctrl+W.

Repeat, and we're done all the same as before.

As a bonus,

save with Ctrl+S.

What's interesting about this approach is that we didn't have to use the mouse at all, unless maybe you used it to highlight the URL in the email. You could get into quite the rhythm with this approach, and your hands never have to leave the keyboard. This is a bit of a faster, more efficient way to convey our thoughts to the machine, right? We don't have to seek out our actions each time in the GUI—the operations are always at our fingertips, literally.

GUIs of a Feather B_fullframe

Same Keybindings Across (Most) GUIs!

Browser, Editor, Window Manager, OS, \ldots

Notes B_noteNH

Another powerful benefit of this approach is that these exact same keybindings work across most GUIs! If we switch out IceCat here with nearly any other major web browser, and switch out gedit with many other text editors or even word processors, this will work all the same!

If you use Windows instead of GNU/Linux—which I strongly discourage, but if you do—then it'll work the same.

Certain keybindings are ubiquitous—if

you hit Ctrl+F in most GUI programs that support searching,

you'll get some sort of context-specific search.

Let's look at those keybindings a bit more concisely, since that last slide was a bit of a mess.

Macro-Like Keyboard Instructions B_fullframe

Macro-Like

Ctrl+T ``https://...'' <N times>

Ctrl+F ``free software''

[ Ctrl+L Ctrl+C Alt+Tab Ctrl+V RET Alt+Tab ]

Ctrl+W

<N times>

- Requires visual inspection for conditional

- Still manual and tedious: what if there were 1000 URLs?

Notes B_noteNH

If we type out the keybindings like this, it looks a bit more like instructions for the machine, doesn't it? Some of you may be familiar with macros—with the ability to record keypresses and play them back later. If we were able to do that, could we automate this task away?

Unfortunately…no. At least, not with the tools we're using right now. Why is that?

Well, for one, it requires visual inspection to determine whether or not a match has occurred. That drives conditional logic—that bracketed part there. We also need to know how many times to repeat, which requires that we either count or watch the progress. And we need to be able to inspect the email for URLs and copy them into the web browser.

This also scales really poorly. While using the keyboard is certainly faster than using the mouse, we're only dealing with a small set of URLs here. What if I gave you 100 of them? 1000? More? Suddenly this doesn't feel like a very efficient way to convey our intent to the machine. I don't wish that suffering upon anyone.

To get around that, we need to change how we think about our computing a bit.

A New Perspective [15/15]

Secrets? B_fullframe

Notes B_noteNH

So what if I told you that I could go grab some coffee and play with my kids and come back later to a list that has been generated for me by an automated process? And what if I told you that it'd only take a minute or two for me to create this process? And that you don't need to be a programmer to do it?

Well if I told you that then you'd be pretty pissed at me for sending you 1000 URLs. So I would never do that.

This is where the whole concept of ``wizardry'' comes in. Some of you are sitting in the audience or watching this remotely rolling your eyes thinking ``oh this guy thinks he's so sweet'', because the answer is obvious to you. But to those of you who are confined to the toolset that I just demonstrated…it's not going to be obvious.

The problem is that there is a whole world and way of computing that is hidden from most users. And it's not hidden because it's a secret. It's because modern interfaces have come to completely mask it or provide alternatives to it that happen to be ``good enough'' for a particular use case.

But ``good enough'' is only good enough until it's not, until you need to do something outside of that preconceived workflow.

Lifting the Curtain B_frame

Columns B_columns

Left B_column

Right B_column

Notes B_noteNH

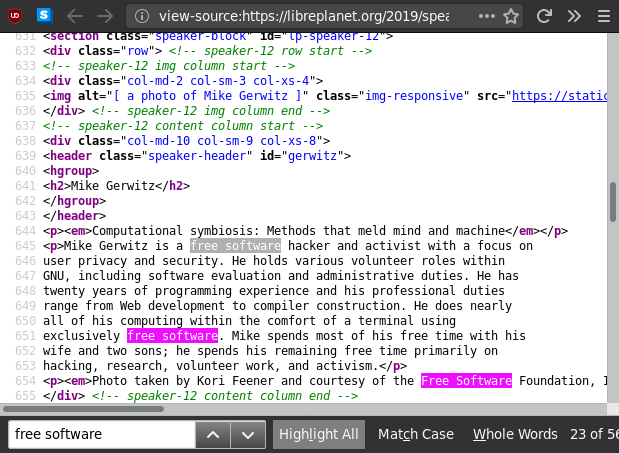

Let's lift the curtain, so to speak, on what's really going on in the web browser.

Right-click on the webpage and select "View Page Source" from the context menu. You get a new tab containing the raw code behind the document. Most of it, anyway. It is a document language called HTML. And as you may have noticed, it's plain text. Structured, but plain, text.

And as you can see,

if we hit Ctrl+F,

``free software'' is there all the same.

We don't need to view the webpage with all its fancy formatting.

For the problem we're trying to solve,

the graphical representation provides little benefit.

Text B_fullframe

Text.

Notes B_noteNH

As we're about to see, this simple fact, that the webpage is represented by plain text, opens up a whole new world to us. We have stripped away all the complex visual GUI stuff and we're left with the raw substance of the page which still contains the information that we are looking for.

But we're still within the web browser.

We don't have to be.

We can copy all of that text and paste it into our editor.

Ctrl+A Ctrl+C Alt+Tab Ctrl+V.

And sure enough,

search works all the same.

Ctrl+F and we can still find ``free software''.

Completely different program,

and we can still find the text using the same keybinding.

Text is a Universal Interface B_fullframe

Text is a Universal Interface

Notes B_noteNH

Text is a universal interface. And what I mean by that is—you don't need any special tools to work with it. You can view it in your web browser. You can view it in your text editor. You can paste it in a text message. You can print it in a book. You can write it down on a paper and type it back into your computer.

Text is how we communicate with one another as human beings.

Let's save this HTML as a file,

speakers.html.

If we opened this file, it would open in our web browser and we would see the same webpage, although it would look a bit different since a lot of the styling is stored outside of this HTML file.

But if again we opened this HTML file in our text editor, you would see that same plain text HTML as before; one program just chooses to render it differently than another.

Even though we can view the HTML in our text editor, we haven't yet eliminated the web browser; we still need it to navigate to the webpage and view its source. But if that's all we're using the web browser for, then it's one hell of an inefficient way of telling the computer that we just want the HTML document at a certain URL.

Up until this point, the keyboard has been used as a secondary interface—as an alternative to something. Now we're going to venture into a world where it is the interface.

The Shell Command Prompt B_frame

mikegerwitz@lp2019-laptop:~$

# ^ user ^ host ^ working directory (home)

This presentation will show:

$ command

output line 1

output line 2

...

output line N

Notes B_noteNH

If you open a VTE, or virtual terminal emulator, you will be greeted with a curious string of characters. This is a command prompt.

The program that is prompting you for a command is called the shell.

The GNU shell is bash,

which is the default on most GNU/Linux systems.

Since we're talking about freedom in your computing, I hope that you'll try bash on an operating system that respects your freedom to use, study, modify, and share its software, like a GNU/Linux distribution. This laptop here runs Trisquel.

Bash is also the default on Mac OS X, but the rest of the operating system is non-free; you have no freedom there. And Windows now has something they call ``Bash on Ubuntu on Windows'', which is just GNU running atop the proprietary Windows kernel. You can do better than that!

When I present commands here, the command line we are executing is prefixed with a dollar sign, and the output immediately follows it, like so.

Eliminating the Web Browser B_frame

$ wget https://libreplanet.org/2019/speakers/

--2019-03-24 00:00:00-- https://libreplanet.org/2019/speakers/

Resolving libreplanet.org (libreplanet.org)... 209.51.188.248

Connecting to libreplanet.org (libreplanet.org)|209.51.188.248|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: unspecified [text/html]

Saving to: ‘index.html’

...

2019-03-24 00:00:00 (1.78 MB/s) - ‘index.html’ saved [67789]

$ wget -O speakers.html \

https://libreplanet.org/2019/speakers/

Notes B_noteNH

Alright! The goal is to retrieve the HTML file at a given URL.

GNU/Linux distributions usually come with GNU wget,

which does precisely that.

To invoke it,

we type the name of the command,

followed by a space,

followed by the URL we wish to retrieve,

and then hit enter.

What follows is quite a bit of text.

The details aren't particularly important as long as it's successful,

but notice that it says it saved to index.html.

That's not intuitive to those who don't understand why that name was used.

So let's tell wget what file we want to output to.

We do this with the -O option,

like so.

It takes a single argument,

which is the name of the output file.

The backslash here allows us to continue the command onto the next line;

otherwise, a newline tells the shell to execute the command.

So remember previously that we manually created speakers.html by viewing

the source of the webpage in a web browser and saving it.

If we open this file,

we'll find that it contains exactly the same text as when we manually

did it,

and we never had to open a web browser.

And we can search it all the same as before for ``free software''.

Browser vs. wget Comparison B_frame

Ctrl+L ``https://libreplanet.org/2019/speakers/''

$ wget https://libreplanet.org/2019/speakers/

Notes B_noteNH

This is a very different means of interacting with the computer, but if we compare this with the keyboard shortcut used previously, they are very similar. Not so scary, right? It's hard to imagine a more direct line of communication with the computer for downloading a webpage, short of reading your mind.

Finding Text on the Command Line B_frame

$ grep 'free software' speakers.html

...

<p>Mike Gerwitz is a free software hacker and activist with a focus on

exclusively free software. Mike spends most of his free time with his

...

Notes B_noteNH

Not having to open a web browser is nice, but having to open the downloaded HTML file just to search it is a bit of a pain; is there a command that can help us there too?

We want to know whether a page contains the term ``free software''.

For that we use a tool called grep.

The first argument to grep is the search string,

and the remaining arguments—just one here—tell it where it should

search.

The first argument to grep is quoted because it contains a space.

You'll get a bunch of output; I just included a small snippet here. But notice how it happens to include exactly the text we were looking at in the web browser.

And with that we have replicated Ctrl+F.

But did we do a good job conveying our thoughts to the machine?

We just wanted to know whether the page contains the phrase, but we don't care to see it! So while we have efficiently conveyed a search string, we didn't receive an efficient reply—it's information overload.
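To see that line-by-line reply on a small scale without touching the network, here is a minimal sketch. The file sample.html and its contents are made up for illustration; only the grep invocation mirrors the slide.

```shell
# Work in a scratch directory so nothing else gets cluttered.
workdir=$(mktemp -d) && cd "$workdir"

# A tiny, made-up stand-in for speakers.html.
printf '%s\n' \
  '<p>A talk about free software and privacy.</p>' \
  '<p>A talk about hardware.</p>' \
  > sample.html

# Search string first, then the file to search.
# grep prints every line that contains the phrase; here that is only the first.
grep 'free software' sample.html
```

Even with two lines of input, the reply is the whole matching line rather than a simple yes or no.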

A More Gentle Reply B_frame

$ grep --quiet 'free software' speakers.html && echo yes

yes

$ echo 'Hello, world!'

Hello, world!

$ grep --quiet 'open source' speakers.html || echo no

no

Notes B_noteNH

First we tell grep to modify its behavior with the quiet flag.

You can also use the short form,

which is just -q.

Rather than outputting results,

grep will exit silently and it will instead return a status to the shell

that says whether or not the search failed.

POSIX-like shells, like Bash, offer the ability to say ``run this next command if the previous succeeds'', and this is done by putting two ampersands between the commands.

The command to run if grep succeeds in finding a match is echo.

All echo does is takes its arguments and spits them right back out again as

output.

So this essentially states:

``search for `free software' in speakers.html and output `yes' if it is

found''.

Since echo is its own command,

it also works by itself.

Here's the classic ``hello, world'' program in shell.

But if you recall our research task, it was to search for pages that do not contain the term ``free software''. We can do that too, by using two pipes in place of two ampersands.
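If you want to see the status that grep hands back to the shell, the special variable $? holds it. The sketch below assumes a made-up sample.html rather than the real speakers page; 0 means a match was found and 1 means it was not.

```shell
# Scratch directory and a made-up page to search.
workdir=$(mktemp -d) && cd "$workdir"
printf '%s\n' '<p>We love free software.</p>' > sample.html

# $? holds the exit status of the command that just ran.
grep -q 'free software' sample.html && echo "match, status $?"
grep -q 'open source' sample.html || echo "no match, status $?"
```

The two ampersands only let echo run on status 0, and the two pipes only let it run on a non-zero status.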

Writing to Files (Redirection) B_frame

- Commands write to standard out (stdout) by default

- Output redirection writes somewhere else

# overwrites each time

$ echo 'Hello, world!' > hello.txt

$ echo 'Hello again, world!' > hello.txt

# appends (echo adds a newline)

$ echo 'First line' >> results.txt

$ echo 'Second line' >> results.txt

Notes B_noteNH

Alright, we're well on our way now! But we still haven't gotten rid of that damn text editor, because we need to save a list of URLs to a file to hold our final results!

Well as it so happens, writing to a file is such a common operation that it's built right into the shell. We use a feature called redirection.

There are two types of output redirection.

If you place a single greater-than symbol followed by a filename after a

command,

then the output of that command will replace anything already in the

file.

So the result of the first two commands will be a hello.txt that contains

only a single line:

``Hello again, world!''.

The second type,

which uses two greater-than symbols,

appends to the file.

echo by default adds a newline,

so the result of the second two commands is a results.txt containing two

lines,

``First line'' and ``Second line'' respectively.

If the file doesn't yet exist,

it will be created.

Starting Our List B_fullframe

$ wget --quiet -O speakers.html \

https://libreplanet.org/2019/speakers/ \

&& grep --quiet 'free software' speakers.html \

|| echo https://libreplanet.org/2019/speakers/ \

>> results.txt

Notes B_noteNH

Take a look at that for a moment. <pause ~5s> Can anyone tell me in just one sentence what the result of this command line will be? <pause ~5s> <react appropriately>

As exciting as it is to start to bring these things together,

the result is pretty anticlimactic:

we know that speakers.html does contain the string ``free software'',

and so the result is that results.txt contains nothing!

In fact,

if results.txt didn't exist yet,

it still wouldn't even exist.

At this point, we have successfully eliminated both the web browser and text editor. But this is a hefty command to have to modify each time we want to try a different URL.
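One detail worth checking before relying on this chain: the two-pipe branch runs whenever the part before it fails, so a failed download would record the URL too. The sketch below uses the standard true and false commands as stand-ins for wget and grep; the message text is made up.

```shell
# `true` and `false` do nothing except succeed or fail.

# Download ok, phrase found: prints nothing.
true && true || echo 'URL would be recorded'

# Download ok, phrase missing: the URL is recorded.
true && false || echo 'URL would be recorded'

# Download failed: grep never runs, yet the URL is still recorded.
false && true || echo 'URL would be recorded'
```

For a quick research task that may be acceptable, since a page we could not fetch probably deserves a second look anyway.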

Command Refactoring B_fullframe

# original:

$ wget --quiet -O speakers.html \

https://libreplanet.org/2019/speakers/ \

&& grep --quiet 'free software' speakers.html \

|| echo https://libreplanet.org/2019/speakers/ \

>> results.txt

$ URL=https://libreplanet.org/2019/speakers/

$ wget --quiet -O speakers.html \

"$URL" \

&& grep --quiet 'free software' speakers.html \

|| echo "$URL" \

>> results.txt

$ URL=https://libreplanet.org/2019/speakers/

$ wget -qO speakers.html \

"$URL" \

&& grep -q 'free software' speakers.html \

|| echo "$URL" \

>> results.txt

$ URL=https://libreplanet.org/2019/speakers/

$ wget -qO - \

"$URL" \

| grep -q 'free software' \

|| echo "$URL" \

>> results.txt

$ URL=https://libreplanet.org/2019/speakers/

$ wget -qO - "$URL" \

| grep -q 'free software' || echo "$URL" >> results.txt

$ alias fetch-url='wget -qO-'

$ URL=https://libreplanet.org/2019/speakers/

$ fetch-url "$URL" \

| grep -q 'free software' || echo "$URL" >> results.txt

Notes B_noteNH

We can simplify it by introducing a variable. First we assign the URL to a variable named URL. We then reference its value by prefixing it with a dollar sign everywhere the URL previously appeared. We put it in quotes just in case it contains special characters or whitespace.
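To see why the quotes matter, here is a small sketch, assuming Bash with its default settings. The helper count_args and the variable PHRASE are made up for illustration; the helper just reports how many arguments it was handed.

```shell
# count_args: hypothetical helper that prints its number of arguments.
count_args() { echo "$#"; }

PHRASE='free software'

count_args "$PHRASE"   # quoted: the value stays together as one argument
count_args $PHRASE     # unquoted: the space splits it into two arguments
```

A URL rarely contains whitespace, but quoting every time means never having to think about it.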

We can also make this command line a bit more concise by using the short

name for the --quiet flag,

which is -q.

Notice how in wget I combined them into -qO instead of using two

separate dashes with spaces between them,

but you don't have to do this.

Something else feels dirty.

We're creating this speakers.html file just to pass to grep.

It's not needed after the fact.

In fact,

it's just polluting our filesystem.

What if we didn't have to create it at all to begin with?

I'm first going to introduce the notation, and then I'll go into why it works.

If we replace the output file speakers.html with a single dash,

that tells wget to write to standard out.

This is normally the default behavior of command line programs,

like grep and echo,

but wget is a bit different.

We then omit the speakers.html from grep entirely.

grep will read from standard in by default.

We then connect standard out of wget to the standard in of grep using a

single pipe, not double;

this is called a pipeline.
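Here is a minimal sketch of the same pipe without the network. printf stands in for wget and writes a made-up page to standard out; grep reads it from standard in, and no file is ever created.

```shell
# Matching case: grep finds the phrase on its standard in.
printf '%s\n' '<p>We love free software.</p>' \
  | grep -q 'free software' && echo 'found via pipe'

# Non-matching case: grep fails, so the command after the two pipes runs.
printf '%s\n' '<p>We love hardware.</p>' \
  | grep -q 'free software' || echo 'not found via pipe'
```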

Now that we've freed up some characters,

let's reformat this slightly to be a bit more readable.

And that wget command looks a bit cryptic.

How about we define an alias so that it looks a bit more friendly,

and then we can stop worrying about what it does?

Now here's the original command we started with, and where we're at now.

This little bit of abstraction has made our intent even more clear. It can now clearly be read that we're defining a URL, retrieving that URL, searching for a term, and then appending it to a file on a non-match.
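Aliases are mostly an interactive convenience; Bash does not expand them in scripts unless told to. If you later save this command line in a file, a shell function is one way to get the same friendly name. This is only a sketch: the names fetch_url and check_url are made up, and it assumes wget is installed.

```shell
# fetch_url: hypothetical function form of the fetch-url alias.
fetch_url() { wget -qO- "$1"; }

# check_url: print the URL only when the page lacks the phrase.
check_url() {
  fetch_url "$1" | grep -q 'free software' || echo "$1"
}
```

With those defined, the whole command line becomes check_url "$URL" >> results.txt.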

By the way, for privacy reasons, you can prefix `wget` with `torify` to run it through Tor, if you have it installed.

But before we keep going, I want to go back to a point I mentioned previously.

Again: Text is a Universal Interface B_againframe

Notes B_noteNH

Text is a universal interface.

Notice how we started out our journey manually inspecting text, and began replacing the human part of the workflow at each step with a command. That's because text is something that both humans and computers can work with easily.

This is a fundamental design principle in the Unix tools that I have begun to present to you.

Pipelines B_fullframe

``Expect the output of every program to become the input to another''

—Doug McIlroy (1978)

Notes B_noteNH

The invention of the Unix pipe is credited to Doug McIlroy. As part of the Unix philosophy, he stated: ``expect the output of every program to become the input to another''.

More broadly, the Unix philosophy can be summarized as:

Summary of the Unix Philosophy B_fullframe

The Unix Philosophy

This is the Unix philosophy: Write programs that do one thing and do it well. Write programs to work together. Write programs to handle text streams, because that is a universal interface.

Notes B_noteNH

<Read it>

Up until this point, we have changed how we communicate with the machine by moving away from a visual interface driven primarily by movement, to a textual interface that puts mind and machine on equal footing. And now here we're talking about another profound shift in how we think.

We start to think of how to decompose problems into small operations that exist as part of a larger pipeline. We think of how to chain small, specialized programs together, transforming text at each step to make it more suitable for the next.

Program Composition [7/7]

LP Sessions B_fullframe

$ fetch-url https://libreplanet.org/2019/speakers/ \

| grep -A5 speaker-header \

| head -n14

<header class="keynote-speaker-header" id="garbee">

<hgroup>

<h2>Bdale Garbee</h2>

</hgroup>

</header>

<p><em>Closing keynote</em></p>

--

<header class="keynote-speaker-header" id="loubani">

<hgroup>

<h2>Tarek Loubani</h2>

</hgroup>

</header>

<p><em>Opening keynote (Day 1)</em></p>

--

$ fetch-url https://libreplanet.org/2019/speakers/ \

| grep -A5 speaker-header \

| grep '<em>'

<p><em>Closing keynote</em></p>

<p><em>Opening keynote (Day 1)</em></p>

<p><em>Opening keynote (Day 2)</em></p>

[...]

<p><em>The Tor Project: State of the Onion</em> and <em>Library Freedom Institute: A new hope</em></p>

<p><em>The Tor Project: State of the Onion</em></p>

[...]

<p><em>Large-scale collaboration with free software</em></p>

<p><em>Large-scale collaboration with free software</em></p>

$ fetch-url https://libreplanet.org/2019/speakers/ \

| grep -A5 speaker-header \

| grep -o '<em>[^<]\+</em>'

<em>Closing keynote</em>

<em>Opening keynote (Day 1)</em>

<em>Opening keynote (Day 2)</em>

[...]

<em>The Tor Project: State of the Onion</em>

<em>Library Freedom Institute: A new hope</em>

<em>The Tor Project: State of the Onion</em>

[...]

<em>Large-scale collaboration with free software</em>

<em>Large-scale collaboration with free software</em>

$ fetch-url https://libreplanet.org/2019/speakers/ \

| grep -A5 speaker-header \

| grep -o '<em>[^<]\+</em>' \

| sort \

| uniq -cd

2 <em>Hackerspace Rancho Electrónico</em>

4 <em>Large-scale collaboration with free software</em>

2 <em>Library Freedom Institute: A new hope</em>

2 <em>Right to Repair and the DMCA</em>

2 <em>Teaching privacy and security via free software</em>

2 <em>The joy of bug reporting</em>

5 <em>The Tor Project: State of the Onion</em>

$ fetch-url https://libreplanet.org/2019/speakers/ \

| grep -A5 speaker-header \

| grep -o '<em>[^<]\+</em>' \

| sort \

| uniq -cd \

| sort -nr \

| head -n5

5 <em>The Tor Project: State of the Onion</em>

4 <em>Large-scale collaboration with free software</em>

2 <em>The joy of bug reporting</em>

2 <em>Teaching privacy and security via free software</em>

2 <em>Right to Repair and the DMCA</em>

$ fetch-url https://libreplanet.org/2019/speakers/ \

| grep -A5 speaker-header \

| grep -o '<em>[^<]\+</em>' \

| sort \

| uniq -cd \

| sort -nr \

| head -n5 \

| sed 's#^ *\(.\+\) <em>\(.*\)</em>#\2 has \1 speakers#'

The Tor Project: State of the Onion has 5 speakers

Large-scale collaboration with free software has 4 speakers

The joy of bug reporting has 2 speakers

Teaching privacy and security via free software has 2 speakers

Right to Repair and the DMCA has 2 speakers

$ fetch-url https://libreplanet.org/2019/speakers/ \

| grep -A5 speaker-header \

| grep -o '<em>[^<]\+</em>' \

| sort \

| uniq -cd \

| sort -nr \

| head -n5 \

| sed 's#^ *\(.\+\) <em>\(.*\)</em>#\2 has \1 speakers#' \

| espeak

Notes B_noteNH

Let's look at a more sophisticated pipeline with another practical example.

I noticed that some LibrePlanet sessions had multiple speakers, and I wanted to know which ones had the most speakers.

The HTML of the speakers page includes a header for each speaker.

Here are the first two.

-A5 tells grep to also output the five lines following matching lines,

and head -n14 outputs only the first fourteen lines of output.

We're only interested in the talk titles, though.

Looking at this output,

we see that the titles have an em tag,

so let's just go with that.

Pipe to grep instead of head.

It looks like at least one of those results has multiple talks,

if you can see the ``and'' there.

But note that each is enclosed in its own set of em tags.

If we add -o to grep,

which stands for only,

then it'll only return the portion of the line that matches,

rather than the entire line.

Further,

if there are multiple matches on a line,

it'll output each match independently on its own line.

That's exactly what we want!

But we have to modify our regex a little bit to prevent it from grabbing

everything between the first and last em tag.

Don't worry if you don't understand the regular expression;

they take time to learn and tend to be easier to write than they are to

read.

This one just says ``match one or more non-less-than characters between em

tags''.

Now assuming that the talk titles are consistent,

we can get a count.

uniq collapses consecutive lines that are identical,

and with -c it can also output a count.

We also use -d to tell it to only output duplicate lines.

But uniq doesn't sort lines before processing,

so we first pipe it to sort.

That gives us a count of each talk!

But I want to know the talks with the most speakers, so let's sort it again, this time numerically and in reverse order, and take the top five.

And we have our answer!

But just for the hell of it,

let's go a step further.

Using sed,

which stands for stream editor,

we can match on portions of the input and reference those matches in a

replacement.

So we can reformat the uniq output into an English sentence,

like so.

I chose pound characters to delimit the match from the replacement.

The numbers in the replacement reference the parenthesized groups in the

match.

And then we're going to pipe it to the program espeak,

which is a text-to-speech synthesizer.

Your computer will speak the top five talks by presenter count to you.

Listening to computers speak is all the rage right now,

right?
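To try the counting and reformatting stages without touching the network, a minimal sketch along these lines may help. It assumes GNU grep and sed (for `\+` in basic regular expressions); `sample_html` and `top_talks` are hypothetical names, and the markup is made up to stand in for the speakers page.

```shell
#!/bin/bash
# Made-up markup standing in for the speakers page (assumption, not real data).
sample_html() {
  cat <<'EOF'
<p><em>Talk A</em></p>
<p><em>Talk B</em> and <em>Talk A</em></p>
<p><em>Talk C</em></p>
<p><em>Talk B</em></p>
<p><em>Talk A</em></p>
EOF
}

# Read HTML on stdin; print talks listed more than once, most frequent first.
top_talks() {
  grep -o '<em>[^<]\+</em>' \
    | sort \
    | uniq -cd \
    | sort -nr \
    | head -n5 \
    | sed 's#^ *\(.\+\) <em>\(.*\)</em>#\2 has \1 speakers#'
}

sample_html | top_talks
# Talk A has 3 speakers
# Talk B has 2 speakers
```

Dropping stages from the end of `top_talks` one at a time shows what each of them contributes.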

Interactive, Incremental, Iterative Development B_fullframe

Incremental Development

Interactive REPL, Iterative Decomposition

Notes B_noteNH

Notice how we approached that problem.

I presented it here just as I developed it.

I didn't open my web browser and inspect the HTML;

I just looked at the wget output and then started to manipulate it in

useful ways working toward my final goal.

This is just one of the many ways to write it.

And this is part of what makes working in a shell so powerful.

In software development, we call environments like this REPLs, which stands for ``read-eval-print loop''. The shell reads a command line, evaluates it, prints a result, and then does that all over again. As a hacker, this allows me to easily inspect and iterate on my script in real time, which can be a very efficient process. I can quickly prototype something and then clean it up later. Or maybe create a proof-of-concept in shell before writing the actual implementation in another language.

But most users aren't programmers. They aren't experts in these commands; they have to play around and discover as they go. And the shell is perfect for this discovery. If something doesn't work, just keep trying different things and get immediate feedback!

And because we're working with text as data, a human can replace any part of this process!

Discovering URLs B_fullframe

$ grep -o 'https\?://[^ ]\+' email-of-links.txt

https://en.wikipedia.org/wiki/Free_software

https://en.wikipedia.org/wiki/Open_source

https://en.wikipedia.org/wiki/Microsoft

https://opensource.org/about

$ grep -o 'https\?://[^ ]\+' email-of-links.txt \

| while read URL; do

echo "URL is $URL"

done

URL is https://en.wikipedia.org/wiki/Free_software

URL is https://en.wikipedia.org/wiki/Open_source

URL is https://en.wikipedia.org/wiki/Microsoft

URL is https://opensource.org/about

$ grep -o 'https\?://[^ ]\+' email-of-links.txt \

| while read URL; do \

fetch-url "$URL" | grep -q 'free software' \

|| echo "$URL"

done \

> results.txt

$ grep -o 'https\?://[^ ]\+' email-of-links.txt \

| while read URL; do \

fetch-url "$URL" | grep -q 'free software' \

|| echo "$URL"

done \

| tee results.txt

https://en.wikipedia.org/wiki/Microsoft

https://opensource.org/about

$ grep -o 'https\?://[^ ]\+' email-of-links.txt \

| while read URL; do \

fetch-url "$URL" | grep -q 'free software' \

|| echo "$URL"

done \

| tee results.txt \

| xclip -i -selection clipboard

$ grep -o 'https\?://[^ ]\+' email-of-links.txt \

| while read URL; do \

fetch-url "$URL" | grep -q 'free software' \

|| echo "$URL"

done \

| tee >( xclip -i -selection clipboard )

https://en.wikipedia.org/wiki/Microsoft

https://opensource.org/about

$ xclip -o -selection clipboard \

| grep -o 'https\?://[^ ]\+' \

| while read URL; do \

fetch-url "$URL" | grep -q 'free software' \

|| echo "$URL"

done \

| tee >( xclip -i -selection clipboard )

https://en.wikipedia.org/wiki/Microsoft

https://opensource.org/about

$ xclip -o -selection clipboard \

| grep -o 'https\?://[^ ]\+' \

| while read URL; do \

fetch-url "$URL" | grep -q 'free software' \

|| echo "$URL"

done \

| tee results.txt

https://en.wikipedia.org/wiki/Microsoft

https://opensource.org/about

$ xclip -i -selection clipboard < results.txt

Notes B_noteNH

Okay, back to searching webpages.

Now that we have a means of creating the list of results,

how do we feed the URLs into our pipeline?

Why not pull them right out of the email with grep?

Let's say you saved the email in email-of-links.txt.

This simple regex should grab most URLs,

but it's far from perfect.

For example,

it'd grab punctuation at the end of a sentence.

But we're assuming a list of URLs.

Here's some example output with a few.

For each of these,

we need to run our pipeline.

It's time to introduce while and read.

while will continue to execute its body in a loop until its command fails.

read will read line-by-line into one or more variables,

and will fail when there are no more lines to read.

So if we insert our fetch-url pipeline into the body,

we get this.

For convenience,

let's use tee,

which is named for a pipe tee;

it'll send output through the pipeline while also writing the same

output to a given file.

So now we can both observe the results and have them written to a file!

But we were just going to reply to an email with those results.

Let's assume we're still using a GUI email client.

Wouldn't it be convenient if those results were already on the clipboard for

us so we can just paste them into the message?

We can accomplish that by piping to xclip as shown here.

Ah, crap, but now we can't see the output again.

Do we really need the results file anymore?

Rather than outputting to a file on disk with tee,

we're going to use a special notation that tells bash to invoke a command

in a subshell and replace that portion of the command line with a path

to a virtual file representing the standard input of that subshell.

This is a bash-specific feature.

Now we can see the output again!

Well,

if we're writing to the clipboard,

why don't we just read from it too?

Instead of saving our mail to a file,

we can just copy the relevant portion and have that piped directly to

grep!

If you have a list of URLs and you just copy that portion,

then you can just get rid of grep entirely.

What if you wrote to results.txt and later decided that you want to copy

the results to the clipboard?

We can do that too by reading results.txt in place of standard input to

xclip,

as shown here.

Phew!
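The loop can be rehearsed offline before pointing it at real pages. In this minimal sketch, `fetch_url` is a hypothetical stand-in that serves canned text instead of downloading anything, the example.org URLs are placeholders, and `find_non_matches` is just a name chosen for the illustration.

```shell
#!/bin/bash
# Stand-in for fetch-url: canned pages instead of the network (assumption).
fetch_url() {
  case "$1" in
    https://example.org/a) echo 'all about free software' ;;
    https://example.org/b) echo 'nothing relevant here' ;;
    *) return 1 ;;
  esac
}

# Read text on stdin, pull out the URLs, and print the ones whose page
# does NOT mention the phrase.
find_non_matches() {
  grep -o 'https\?://[^ ]\+' \
    | while read -r URL; do
        fetch_url "$URL" | grep -q 'free software' \
          || echo "$URL"
      done
}

results=$(mktemp)
printf 'See https://example.org/a and\nalso https://example.org/b\n' \
  | find_non_matches \
  | tee "$results"
# https://example.org/b
```

Because `tee` sits at the end, the same line shows up on the terminal and in the temporary file named by `$results`.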

Go Grab a Coffee B_fullframe

Go Grab a Coffee

Notes B_noteNH

Remember when I said I could go grab a coffee and play with the kids while the script did its thing? Well now's that time.

But grabbing a coffee means that this system is a bottleneck. The Internet is fast nowadays; ideally, we wouldn't have to wait long. Can we do better?

Async Processes B_fullframe

$ sleep 1 && echo done & echo start

start

done

(Don't do this for large numbers of URLs!)

$ while read URL; do

fetch-url "$URL" | grep -q 'free software' \

|| echo "$URL" &

done | tee results.txt

Notes B_noteNH

What if we could query multiple URLs in parallel?

Shells have built-in support for backgrounding tasks so that they can run

while you do other things;

all you have to do is place a single ampersand at the end of a command.

So in this example,

we sleep for one second and then echo ``done''.

But that sleep and subsequent echo are put into the background,

and the shell proceeds to execute echo start while sleep is running in

the background.

One second later,

it outputs ``done''.

So here's the loop we were just writing. If we add an ampersand at the end of that pipeline, it'll run in the background and immediately proceed to the next URL, executing the loop again.

But there's a problem with this approach. Sure, it's fine if we only have a few URLs. But what if we have 1000? That isn't efficient, and it's a bit rude to server administrators.
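Backgrounding is easy to observe in a script as well. The sketch below adds one thing the slide does not show: the `wait` builtin, which blocks until background jobs have finished. `slow_echo` and `run_demo` are hypothetical names, and fractional arguments to `sleep` assume GNU coreutils.

```shell
#!/bin/bash
# Sleep for a while, then print a message.
slow_echo() {
  sleep "$1"
  echo "$2"
}

run_demo() {
  slow_echo 0.3 done &   # runs in the background
  echo start             # runs immediately, without waiting for the sleep
  wait                   # block until the background job has finished
}

run_demo
# start
# done
```

Without `wait`, a script could exit while its background jobs are still running.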

Executable Shell Script and Concurrency B_fullframe

url-grep B_block

#!/bin/bash

search=$1

url=$2

wget -qO- "$url" \

| grep -q "$search" || echo "$url"

Execute B_ignoreheading

$ chmod +x url-grep

$ while read URL; do

./url-grep 'free software' "$URL" >> results.txt

done

$ xargs -n1 ./url-grep 'free software' > results.txt

$ xargs -n1 -P5 ./url-grep 'free software' > results.txt

# ^ 5 concurrent processes

Notes B_noteNH

Before we continue, we're going to have to write our pipeline in a way that other programs can run it. Up to this point, the program has just been embedded within an interactive shell session. One of the nice things about shell is that you can take what you entered onto the command line and paste it directly into a file and, with some minor exceptions, it'll work all the same.

Let's take our pipeline and name it url-grep.

Aliases only work in interactive sessions by default,

so we're going to just type wget directly here.

Alternatively,

you can define a function.

We use the positional parameters 1 and 2 here to represent the

respective arguments to the url-grep command,

and assign them to new variables for clarity.

The comment at the top of the file is called a ``shebang''. This is used by the kernel so that it knows what interpreter to use to run our program.

To make it executable,

we use chmod to set the executable bits on the file.

We can then invoke it as if it were an executable.

You actually don't have to do this;

you can call bash url-grep instead of making it executable,

and then you don't need the shebang either.

Now we replace the while loop with xargs.

It takes values from standard in and appends them as arguments to the

provided command line.

We specify -n1 to say that only one argument should be read from stdin

for any invocation of the command;

that makes it run a new command for every line of input.

Otherwise it'd just append N URLs as N arguments.

And now we can simply use -P to tell it how many processes to use at once.

Here we specify 5,

meaning xargs will run five processes at a time.

You can change that to whatever number makes sense for you.
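The xargs invocation can be tried without a network connection by swapping in a script that searches local files. This is only a sketch: `file-grep` is a hypothetical stand-in for url-grep, the temporary files are made up, and `-P` assumes an xargs that supports it, such as GNU's.

```shell
#!/bin/bash
workdir=$(mktemp -d)

# Hypothetical stand-in for url-grep: takes a file name instead of a URL.
cat > "$workdir/file-grep" <<'EOF'
#!/bin/bash
search=$1
file=$2
grep -q "$search" "$file" || echo "$file"
EOF
chmod +x "$workdir/file-grep"

printf 'we love free software\n' > "$workdir/a.txt"
printf 'something else\n'        > "$workdir/b.txt"
printf 'more text\n'             > "$workdir/c.txt"

# One file name per invocation (-n1), two invocations at a time (-P2).
# Parallel output arrives in no particular order, hence the final sort.
non_matching() {
  printf '%s\n' "$workdir"/*.txt \
    | xargs -n1 -P2 "$workdir/file-grep" 'free software' \
    | sort
}

non_matching
```

This prints the paths of b.txt and c.txt, the two files that lack the phrase.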

Execution Time B_frame

$ wc -l url-list

1000

$ time xargs -n1 -P10 ./url-grep 'free software' < url-list

real 0m17.548s

user 0m8.283s

sys 0m4.877s

Notes B_noteNH

So how long does it take to run?

I took a few URLs and just repeated them in a file so that I had 1000 of

them.

Running the xargs command,

it finishes in under 18 seconds on my system at home.

Obviously YMMV,

and certain sites may be slower to respond than others.

If you were to write this from scratch knowing what you know now, then in just a few minutes, the task will have been automated away and completed, all by gluing together existing programs.

You don't need to be a programmer to know how to do this; you just need to be familiar with the tools and know what's possible, which comes with a little bit of practice. It can certainly be daunting at first. But I hope that by walking you through these examples and showing you how to construct them step-by-step, it helps to demystify them and show that there is no wizardry involved.

We've come a long way from using the web browser and a mouse.

Now that we've finally completed our research task, let's look at a few more examples.

More Examples [8/8]

Resize Images B_frame

$ for img in *.png; do

convert "$img" -resize 50% "sm-$img"

done

# nested directories

$ find . -name '*.png' -exec convert {} -resize 50% sm-{} \;

Notes B_noteNH

This example will be useful to people who have a lot of images that they want

to perform an operation on.

ImageMagick has a convert tool which can do a huge variety of image

manipulations that you would expect to have to use, say, GIMP for.

In this case,

we're just using one of the simplest ones to reduce the image size by 50%.

The first example uses globbing to find all PNG images in the current

directory.

The second example uses the find command and searches all child

directories as well.

Both examples produce a new set of images prefixed with sm-.
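One caveat with nested directories: find expands {} to a path such as ./photos/a.png, so a plain sm-{} puts the prefix in front of the whole path rather than the file name. A minimal sketch of one way around that follows; `small_name` and `resize_tree` are hypothetical helpers, not part of ImageMagick.

```shell
#!/bin/bash
# Print the destination for a resized copy: same directory, "sm-" prefix
# on the file name only.
small_name() {
  local path=$1
  local dir=${path%/*} base=${path##*/}
  # A path without a slash has no directory part; use the current directory.
  if [ "$dir" = "$path" ]; then
    dir=.
  fi
  printf '%s/sm-%s\n' "$dir" "$base"
}

# Resize every PNG below the given directory (default: current directory),
# skipping files that already carry the sm- prefix.
resize_tree() {
  find "${1:-.}" -name '*.png' ! -name 'sm-*' -print0 \
    | while IFS= read -r -d '' img; do
        convert "$img" -resize 50% "$(small_name "$img")"
      done
}

small_name ./photos/a.png
# ./photos/sm-a.png
```

Running `resize_tree ~/Pictures` would then write each smaller copy next to its original; that step needs ImageMagick installed.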

Password Generation B_frame

# generate a random 32-character password

$ tr -cd '[:graph:]' < /dev/urandom | head -c32

`TB~cmJQ1%S8&tJ,%FoD54}"Fm4}\Iwi

# generate passphrase from EFF's large dice wordlist

# (https://www.eff.org/dice)

$ cut -f2 eff_large_wordlist.txt \

| sort -R --random-source=/dev/urandom \

| head -n6 \

| tr '\n' ' '

oppressor roman jigsaw unhappy thinning grievance

Notes B_noteNH

How about password generation?

/dev/urandom is a stream of random binary data.

We can use tr to delete everything that is not a printable character by

taking the complement of graph.

That type of password is useful if you have a password manager,

but it's not useful if you need to memorize it.

When memorization is needed,

a passphrase may be a better option.

A common way to generate those is to use a large list of memorable words and

choose them at random.

Diceware is one such system,

and the EFF has its own word list.

But we don't need physical dice when we can just use sort to randomly

permute the word list.

The EFF recommends taking at least six words,

which is what I did here.

This one is particularly memorable and morbid-sounding.

I'm a little upset that I put it in the slide instead of using it!
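If you generate passwords often, the two command lines can be wrapped in functions. This is a sketch under a few assumptions: `gen_password` and `gen_passphrase` are hypothetical names, `sort -R --random-source` is a GNU option, and the word list is expected in the tab-separated number-then-word layout that the slide's `cut -f2` implies. Setting `LC_ALL=C` is an addition here; it makes tr treat the random input as plain bytes, which some tr implementations require.

```shell
#!/bin/bash
# gen_password [LENGTH]: random printable, non-space characters.
gen_password() {
  local length=${1:-32}
  LC_ALL=C tr -cd '[:graph:]' < /dev/urandom | head -c "$length"
  echo   # finish with a newline
}

# gen_passphrase WORDLIST [COUNT]: COUNT distinct words from the list,
# joined by single spaces.
gen_passphrase() {
  local wordlist=$1 count=${2:-6}
  cut -f2 "$wordlist" \
    | sort -R --random-source=/dev/urandom \
    | head -n "$count" \
    | paste -sd ' ' -
}

gen_password 32
```

Usage would look like `gen_passphrase eff_large_wordlist.txt 6`. Unlike rolling dice, shuffling the list never repeats a word within one passphrase.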

Password Manager B_frame

$ gpg --decrypt password-db.gpg | head -n3

https://foo.com

user mikegerwitz

pass !({:pT6DcJG.cr&OAco_EC63r_*xg|uD

$ gpg --decrypt password-db.gpg \

| grep -A2 ^https://foo.com \

| tail -n2 \

| while read key value; do

echo "paste $key..."

printf %s "$value" \

| xclip -i -selection clipboard -l 1 -quiet

done

Notes B_noteNH

Speaking of password managers: we can create a pretty decent one rather quickly. Let's say we have a password list encrypted using GnuPG, which can be decrypted like so. Each account has a URL, username, and password.

We pipe the output to grep to find the one we're looking for.

The caret anchors the match to the beginning of the line.

We then use tail to keep only the last two lines,

discarding the URL.

Here we see read with more than one variable.

For each line,

we read the first word into key and the rest of the line into value.

This is where it gets a bit more interesting.

We don't want to actually output our password anywhere where others could

see it or where it may be vulnerable to a side channel like Van Eck

phreaking.

Instead,

we're going to copy the value to the clipboard.

Here we use printf instead of echo because of some technical details I

don't have time to get into here,

but it has to do with echo option parsing.

(If you're reading this: imagine what happens if the password is ``-n foo'', for example.)

But the clipboard has its own risks too.

What if there's a malicious program monitoring the clipboard for passwords?

I used -l 1 with xclip.

-l stands for ``loop'',

which is the number of times to serve paste requests.

Normally xclip goes into the background and acts as a server.

-quiet keeps xclip in the foreground.

So if we see that the script moves on to request pasting the next value

before we've actually pasted it,

then we'll be made aware that something is wrong and perhaps we should

change our account information.

Another nice consequence is that the first value you paste will be the username, and the second value will be the password. And then the script will exit. Very convenient!

Since the decrypted data exist only as part of the pipeline, the decrypted passwords are only kept plaintext in memory for the duration of the script.
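The field handling can be checked without GnuPG or a clipboard. In this sketch, `record` is a hypothetical stand-in for the decrypted database, with a made-up account whose password begins with -n, and `show_fields` prints each value in brackets instead of copying it anywhere.

```shell
#!/bin/bash
# Made-up record standing in for the output of gpg --decrypt (assumption).
record() {
  printf '%s\n' 'https://foo.example' 'user alice' 'pass -n two words'
}

# Find the account, drop the URL line, and split each remaining line into
# its first word (key) and the rest of the line (value).
show_fields() {
  grep -A2 '^https://foo.example' \
    | tail -n2 \
    | while read -r key value; do
        printf '%s=[%s]\n' "$key" "$value"
      done
}

record | show_fields
# user=[alice]
# pass=[-n two words]
```

Note that `value` keeps the embedded spaces, and that printf passes the leading -n through untouched.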

Remote Password Manager With 2FA B_frame

- Add extra-socket to .gnupg/gpg-agent.conf

- Add RemoteForward in .ssh/config for host

- Save script on previous slide as get-passwd

$ ssh -Y mikegerwitz-pc get-passwd https://foo.com

Notes B_noteNH

But what if you need passwords on multiple devices? Well, then you have to worry about how to keep them in sync. It also means that, if you are travelling and asked to decrypt files on your laptop, you're put in a tough spot.

Instead,

let's make that command line from the previous slide a script called

get-passwd,

and have it take the URL as an argument.

We can then access it remotely over SSH.

But to have clipboard access,

we need to forward our X11 session,

which is what -Y does.

But that's still not good enough. What if my master password (the password for my database) is compromised? I'd rather have two-factor authentication. I asymmetrically encrypt my password database to a key pair whose private key is stored on my Nitrokey, which is a smart card; keys cannot be extracted from it, unless there's a vulnerability of course. To unlock the card, I must enter a PIN. So that's both something I know and something I have. If you enter the PIN incorrectly three times, the PIN needs to be reset with an administrative password. If you get that wrong three times, the device bricks itself. And this all works over SSH too.

Asymmetric encryption is also nice for shared passwords. I share a small password database with my wife, for example.

Encrypting long-term secrets with asymmetric keys isn't a great idea, so there's a tradeoff. I choose to accept it, because passwords aren't long-term secrets; I can easily change them. But you could easily do both: first require decryption with your smart card and then enter a passphrase for a symmetric key.

Taking Screenshots B_frame

# draw region on screen, output to ss.png

$ import ss.png

# screenshot of entire screen after 5 seconds

$ import -pause 5 -window root ss.png

# screenshot to clipboard

$ import png:- | xclip -i -selection clipboard -t image/png

Notes B_noteNH

Okay,

back to something less serious.

ImageMagick also comes with a vaguely-named utility called import,

which can capture images from an X server.

Screenshots.

If you run it with just a filename, then it'll change your cursor to a cross. You can drag to define a rectangular region to copy, or you can just click on a window to take a screenshot of the whole thing.

The bottom example avoids outputting to a file entirely and instead feeds

the raw image data to standard out.

We then pipe that to xclip to copy it to the clipboard.

Notice the new image/png type there.

Now you can paste the image into other programs,

like image editors or websites.

Screenshot OCR B_frame

$ import png:- | tesseract -psm 3 - - | cowsay

 __________________________________
/ Keynote Speakers                 \
|                                  |
| i                                |
|                                  |
| Bdale Garbee Micky Metts Richard |
\ Stallman Tarek Loubani           /
 ----------------------------------
        \   ^__^
         \  (oo)\_______
            (__)\       )\/\
                ||----w |
                ||     ||

# OCR at URL

$ wget -qO- https://.../keynote_banner.png \

| tesseract -psm 3 - -

# perform OCR on selected region and speak it

$ import png:- | tesseract -psm 3 - - | espeak

# perform OCR on clipboard image and show in dialog

$ xclip -o -selection clipboard -t image/png \

| tesseract -psm 3 - - \

| xargs -0 zenity --info --text

Notes B_noteNH

Since import lets us select a region of the screen,

what if we used that to create a script that runs OCR on whatever we

select?

And just for fun,

what if the translation was presented to us by a cow?

tesseract is a free (as in freedom) optical character recognition

program.

In this example, I selected the keynote speaker image on the LP2019 website. It worked quite well.

But a cow isn't practical. Instead, you can imagine maybe binding this to a key combination in your window manager. In that case, maybe it'd be more convenient if it was spoken to you, like the second example. Or maybe copied to your clipboard.

In the third example,

we take an image off of the clipboard,

run OCR,

and then display the resulting text in a GUI dialog with zenity.

I really like these examples, because to an average user, all of this seems somewhat novel. And yet, it's one of the simplest examples we've done!

YMMV though with tesseract, depending on your settings and training data.

Full Circle B_fullframe

$ xdotool search --name ' GNU IceCat$' windowactivate --sync \

windowsize 1024 600 \

key ctrl+t \

&& while read url; do

xdotool getactivewindow \

key ctrl+l type "$url" \

&& xdotool getactivewindow \

key Return sleep 5 \

key ctrl+f sleep 0.5 \

type 'free software' \

&& import -window "$( xdotool getactivewindow )" \

-crop 125x20+570+570 png:- \

| tesseract -psm 3 - - \

| grep -q ^Phrase && echo "$url"

done < url-list | tee results.txt

Notes B_noteNH

Okay, for this final example, I'm going to do something a bit more complex. Please take a few seconds to look at this. <pause>

xdotool is a swiss army knife of X11 operations.

It can find windows,

give them focus,

send keystrokes,

move the mouse,

and do many other things.

The first line here finds the first window ending in ``GNU IceCat'' and

gives it focus.

--sync blocks until that completes so we don't proceed too hastily.

We then proceed to resize it to 1024x600,

and then open a new tab by sending Ctrl+T.

That's right. We've come full circle back to the web browser.

We then proceed to read each URL from a file url-list,

which you can see after done.

For each URL,

we send Ctrl+L to give focus to the location bar and then type in the

URL.

We can't chain any commands in xdotool after type,

which is why we have another invocation to hit Return.

We then sleep for five seconds to give time to navigate.

I use Tor,

so latency varies.

We then send Ctrl+F,

wait very briefly to give IceCat time to trigger the find,

and then send our string to search for.

If you remember,

I mentioned that part of the problem with the GUI approach is that it

requires visual inspection.

Fortunately for us,

we have tesseract!

So we take a screenshot of the IceCat window at the offset where the match

results are on my system.

We pipe that image to tesseract and,

if it contains the word ``Phrase'',

as in ``Phrase not found'',

we echo the URL as a non-match.

All of this is tee'd to results.txt like before.

This is not what I had in mind when I talked about melding mind and machine. But this shows that, even with GUIs, we can produce some level of automation using existing tools and a little bit of creativity. Of course, this may not work on your system if your font size is different or because of various other factors; to generalize this, we'd have to get more creative to find the result text.

It's also worth mentioning that xdotool can come in handy for the password

manager too—instead

of copying to the clipboard,

we can type it directly into a window.

And by specifying which window to send it to,

we can ensure that we don't accidentally type in the wrong window,

like a chat,

if the user changes focus.

That also thwarts systems that implement the terrible anti-pattern of trying to

prevent you from pasting passwords.

Getting Help B_frame

- All GNU packages have Info manuals and --help

- Most programs (including GNU) have manpages

$ grep --help # usage information for grep

$ man grep # manpage for grep

$ info grep # full manual for grep

# bash help

$ help

$ man bash

Notes B_noteNH

I have presented a lot of stuff here, and I only got to a fraction of what I would have liked to talk about. Some of you watching this aren't familiar with a lot of these topics, or possibly any of them, so getting started may seem like a daunting task. Where do you start?

As I showed before,

the process of writing a command line is an iterative one.

Learning is the same way.

I don't remember all of the options for these programs.

xdotool I had used only lightly before this talk,

for example;

I had to research how to write that command line.

But I didn't use the Internet to do it;

I did it from the comfort of my terminal using only what was already

installed on my system.

You can usually get usage information for programs by typing --help after

the command.

This will work for all GNU programs,

but not all command line programs implement it.

All GNU programs also offer Info manuals,

which read like books;

those can be found with the info command,

and can also be read in a much better format using Emacs.

You can also usually find them online in HTML format.

Most command line programs for Unix-like operating systems also include

manpages,

accessible with the man command.

If you want to know how to use man,

you can view its own manpage using man man.

You can also get some help using Bash itself by simply typing help to list

its builtins,

and it has a very comprehensive manpage.

This is obviously a bit different than how people interact with GUIs, which are designed to be discoverable without the need for users to read documentation.

Thank You B_fullframe

Thank you.

Mike Gerwitz

Slides and Source Code Available Online

<https://mikegerwitz.com/talks/cs4m.pdf>

Licensed under the Creative Commons Attribution ShareAlike 4.0 International License